Contrastive Representation Learning

Self-Supervised Learning

In image recognition, Contrastive Representation Learning is a self-supervised technique for learning useful features from unlabeled images by teaching a model to distinguish between similar and dissimilar images. It belongs to the broader category of self-supervised learning, in which the model derives its own supervisory signal from the input data rather than from human-provided labels.

What is Contrastive Representation Learning?

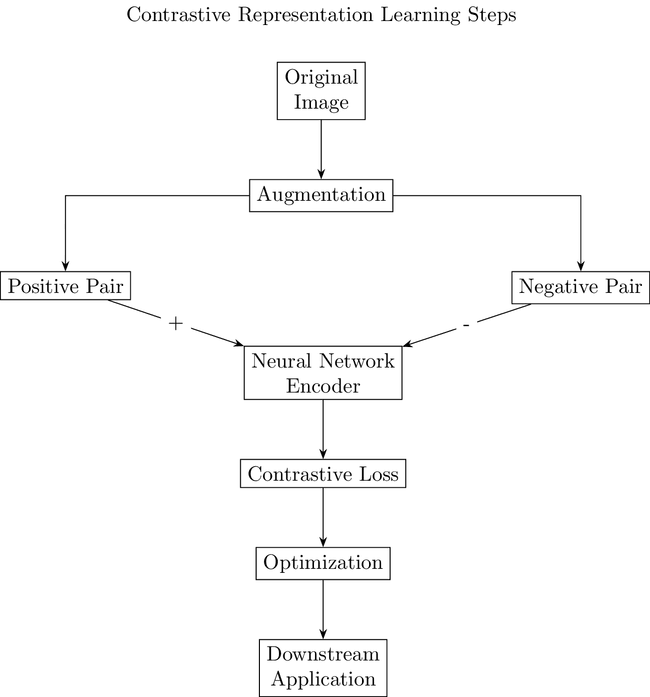

Contrastive Representation Learning is a self-supervised learning framework that learns representations by contrasting positive pairs against negative pairs. A positive pair consists of two different augmentations of the same image, while negative pairs are composed of augmentations from different images. The goal is to learn an embedding space where positive pairs are closer to each other, and negative pairs are farther apart.
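To make the pairing concrete, here is a minimal NumPy sketch that, for a batch of N images with two augmented views each, marks which pairs of views count as positive. The layout (the views of image k sit at rows 2k and 2k + 1) and the helper name `positive_pair_mask` are illustrative assumptions, not part of the method itself.

```python
import numpy as np

def positive_pair_mask(num_images: int) -> np.ndarray:
    """Boolean (2N, 2N) matrix that is True where views i and j form a positive pair.

    Hypothetical helper; assumes the two views of image k are at rows 2k and 2k + 1.
    """
    n_views = 2 * num_images
    # Source image of each view: [0, 0, 1, 1, 2, 2, ...]
    source = np.repeat(np.arange(num_images), 2)
    same_source = source[:, None] == source[None, :]
    # A view is never paired with itself
    return same_source & ~np.eye(n_views, dtype=bool)

mask = positive_pair_mask(3)
print(mask.astype(int))
```

Each row contains exactly one positive (the partner view); the remaining 2N - 2 entries in that row are negatives.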

How It Works: A Step-by-Step Example

Let's consider a simple example with a dataset of animal images without any labels indicating the animal types.

Step 1: Generate Augmented Images

- Original Image: Start with an image of a cat.

- Augmentation: Create two augmented versions of this image (e.g., by applying random cropping, rotation, or color jittering). These form a positive pair because they originate from the same image.

- Negative Sample: Select another image (e.g., a dog) from the dataset and perform similar augmentations to create a negative example, as it represents a different original image.
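A minimal NumPy sketch of Step 1, assuming images are `(H, W, C)` float arrays with values in [0, 1]. The helper `augment`, the 24-pixel crop, the brightness range, and restricting rotation to multiples of 90 degrees (which keeps a square crop square) are illustrative choices; in practice a library such as torchvision usually supplies these transforms.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator, crop: int = 24) -> np.ndarray:
    """Return one randomly augmented view of an (H, W, C) image in [0, 1] (hypothetical helper)."""
    h, w, _ = image.shape
    # Random crop: a crop x crop window at a random position
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    view = image[top:top + crop, left:left + crop]
    # Random rotation by 0, 90, 180 or 270 degrees in the spatial plane
    view = np.rot90(view, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Colour jitter (brightness only here): scale pixel values, then clip back to [0, 1]
    view = np.clip(view * rng.uniform(0.8, 1.2), 0.0, 1.0)
    return view

rng = np.random.default_rng(0)
cat = rng.random((32, 32, 3))  # hypothetical stand-in for the cat photo
dog = rng.random((32, 32, 3))  # hypothetical stand-in for the dog photo

# Positive pair: two independent augmentations of the same source image
view_a, view_b = augment(cat, rng), augment(cat, rng)
# Negative example: an augmentation of a different source image
negative = augment(dog, rng)
```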

Step 2: Use a Neural Network to Learn Representations

- Encoder Network: Pass both images of the positive pair and the negative image through a neural network encoder (e.g., a convolutional neural network) to obtain embeddings (feature vectors) for each.

- Objective: Optimize the network so that the embeddings of the positive pair are closer to each other than to the embedding of the negative example in the feature space.
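The sketch below illustrates the shape of Step 2 without a real CNN. The hypothetical `ToyEncoder` flattens each image, applies a fixed random linear projection, and L2-normalises the result so that the dot product of two embeddings equals their cosine similarity. Because it is untrained, the positive pair here only ends up closer than the negative because the second "view" is a lightly perturbed copy; training is what enforces this ordering for real augmentations.

```python
import numpy as np

class ToyEncoder:
    """Hypothetical stand-in for a CNN encoder: flatten, linear projection, L2-normalise."""

    def __init__(self, in_dim: int, embed_dim: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.weights = rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(in_dim, embed_dim))

    def __call__(self, images: np.ndarray) -> np.ndarray:
        flat = images.reshape(len(images), -1)   # (batch, H*W*C)
        z = flat @ self.weights                  # (batch, embed_dim)
        return z / np.linalg.norm(z, axis=1, keepdims=True)

rng = np.random.default_rng(1)
cat = rng.random((24, 24, 3)) - 0.5                  # zero-centred toy images
cat_view = cat + 0.01 * rng.normal(size=cat.shape)   # mild perturbation as the second view
dog = rng.random((24, 24, 3)) - 0.5

encoder = ToyEncoder(in_dim=24 * 24 * 3)
z_anchor, z_pos, z_neg = encoder(np.stack([cat, cat_view, dog]))

# Unit-length embeddings, so the dot product is the cosine similarity
sim_pos = float(z_anchor @ z_pos)
sim_neg = float(z_anchor @ z_neg)
```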

Step 3: Define the Contrastive Loss

- Loss Function: Use a contrastive loss function, such as the triplet loss or the Noise Contrastive Estimation (NCE) loss. A popular choice is the InfoNCE loss, which, for a given positive pair, contrasts its similarity against the similarities of many negative pairs in the same batch.

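- Mathematically, for a positive pair of embeddings $z_i$ and $z_j$, and a set of negative embeddings $\{z_k^-\}_{k=1}^{N}$, the InfoNCE loss for the anchor $z_i$ takes the form:

$$\mathcal{L}_i = -\log \frac{\exp\big(\mathrm{sim}(z_i, z_j)/\tau\big)}{\exp\big(\mathrm{sim}(z_i, z_j)/\tau\big) + \sum_{k=1}^{N} \exp\big(\mathrm{sim}(z_i, z_k^-)/\tau\big)}$$

where $\mathrm{sim}(\cdot, \cdot)$ is a similarity measure between two embeddings (e.g., dot product), and $\tau$ is a temperature parameter that scales the similarities.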
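A NumPy transcription of the InfoNCE loss for a single anchor: the negative log of the positive's softmax probability, where each logit is a similarity divided by the temperature. It assumes the embeddings are already L2-normalised so that the dot product serves as the similarity; the function name `info_nce` and the default temperature of 0.1 are illustrative. The denominator uses the log-sum-exp trick so that small temperatures do not overflow `exp`.

```python
import numpy as np

def info_nce(z_i: np.ndarray, z_j: np.ndarray, negatives: np.ndarray, tau: float = 0.1) -> float:
    """InfoNCE loss for anchor z_i, positive z_j (shape (D,)) and negatives (shape (N, D)).

    Illustrative helper (hypothetical name); uses the dot product as the similarity
    and assumes all embeddings are L2-normalised.
    """
    pos_logit = np.dot(z_i, z_j) / tau
    neg_logits = negatives @ z_i / tau
    logits = np.concatenate(([pos_logit], neg_logits))
    # log of the denominator via log-sum-exp, for numerical stability
    m = logits.max()
    log_denominator = m + np.log(np.exp(logits - m).sum())
    # -log(exp(pos) / denominator) = log(denominator) - pos
    return float(log_denominator - pos_logit)

e = np.eye(4)  # four orthogonal unit vectors as toy embeddings
good = info_nce(e[0], e[0], negatives=e[1:3])                  # positive identical to the anchor
bad = info_nce(e[0], e[1], negatives=np.stack([e[0], e[2]]))   # a negative is identical instead
print(good, bad)
```

When all similarities are equal the loss is log(N + 1), the value of a uniform guess among the N + 1 candidates; it approaches 0 as the positive's similarity dominates the negatives'.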

Step 4: Train the Model

- Training: By minimizing the contrastive loss over many batches of images, the encoder learns to produce embeddings that bring augmentations of the same image closer together while pushing apart augmentations of different images.

- Result: The trained encoder produces embeddings that stay similar across the augmentations used during training while remaining distinct for different images. These embeddings are useful for distinguishing between images even though the model never saw any labels.
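In practice the loss is computed for every view in a batch at once: each view's partner is its positive and every other view in the batch is a negative, as in the in-batch contrast described in Step 3 (SimCLR's NT-Xent loss is one well-known instance). A minimal NumPy sketch of that per-batch objective follows. It assumes the two views of image k sit at rows 2k and 2k + 1, and the name `batch_contrastive_loss` is hypothetical. A real training loop would compute this on the encoder's outputs and backpropagate with a framework such as PyTorch or JAX, which is omitted here.

```python
import numpy as np

def batch_contrastive_loss(z: np.ndarray, tau: float = 0.5) -> float:
    """Mean InfoNCE loss over a batch of 2N embeddings of shape (2N, D).

    Hypothetical helper; assumes rows 2k and 2k + 1 hold the two views of image k.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # dot product = cosine similarity
    logits = (z @ z.T) / tau
    np.fill_diagonal(logits, -np.inf)                   # never contrast a view with itself
    n_views = len(z)
    partner = np.arange(n_views) ^ 1                    # 0<->1, 2<->3, ...
    # Row-wise log-softmax, computed stably
    m = logits.max(axis=1, keepdims=True)
    log_probs = logits - m - np.log(np.exp(logits - m).sum(axis=1, keepdims=True))
    # Average negative log-probability assigned to each view's partner
    return float(-log_probs[np.arange(n_views), partner].mean())

e = np.eye(8)[:4]                   # four orthogonal toy "image" directions in 8-D
aligned = np.repeat(e, 2, axis=0)   # both views of image k share direction k
mismatched = aligned[[0, 2, 1, 3, 4, 6, 5, 7]]  # partners now come from different images
print(batch_contrastive_loss(aligned), batch_contrastive_loss(mismatched))
```

With a temperature of 0.5, the aligned batch gives log(1 + 6e^-2), about 0.59, while pairing views from different images raises the loss to log(e^2 + 6), about 2.59. Minimising this quantity over many batches is what pushes the encoder toward the aligned configuration.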

Step 5: Applying the Learned Representations

- Transfer Learning: The learned embeddings can then be used for downstream tasks such as image classification, either by fine-tuning the pre-trained encoder on a small labeled dataset or by training a classifier on top of the frozen embeddings.
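A minimal NumPy sketch of the second option, a classifier trained on frozen embeddings (often called a linear probe): a softmax-regression model fitted by full-batch gradient descent. The embeddings here are synthetic stand-ins for a frozen encoder's outputs, and the names `train_linear_probe` and `predict`, the learning rate, and the step count are illustrative assumptions. Only `W` and `b` are trained; the encoder is left untouched, which is what distinguishes this from fine-tuning.

```python
import numpy as np

def train_linear_probe(embeddings, labels, num_classes, lr=0.5, steps=500):
    """Fit softmax regression on frozen embeddings with full-batch gradient descent (hypothetical helper)."""
    n, d = embeddings.shape
    W = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(steps):
        logits = embeddings @ W + b
        logits -= logits.max(axis=1, keepdims=True)   # stabilise the softmax
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                    # gradient of mean cross-entropy w.r.t. logits
        W -= lr * embeddings.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(embeddings, W, b):
    return np.argmax(embeddings @ W + b, axis=1)

rng = np.random.default_rng(0)
centers = rng.normal(size=(2, 16))                 # hypothetical cluster per class (0 = cat, 1 = dog)
labels = rng.integers(0, 2, size=40)
emb = centers[labels] + 0.1 * rng.normal(size=(40, 16))
emb /= np.linalg.norm(emb, axis=1, keepdims=True)  # contrastive embeddings are often L2-normalised

W, b = train_linear_probe(emb, labels, num_classes=2)
accuracy = (predict(emb, W, b) == labels).mean()
```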
